In the previous article we discussed a very basic architecture for hosting a web application and how the web request cycle is carried out. In this article we will modify that architecture to set a starting point for hosting an application when a high volume of traffic is expected.

A single server has limited resources, and when it is bombarded with millions of requests it is bound to choke. To handle such a high volume of traffic, resources must be added to the existing setup. This process is called scaling.

Types of Scaling

When it comes to hardware, there are two types of scaling.

- Vertical Scaling: Resources such as RAM, CPU or hard disk are added to the existing hardware.

- Horizontal Scaling: Nodes are added to the existing pool of nodes.

In this article we will focus on horizontal scaling and add nodes and relevant components to make the architecture work.

Upgrading The Web Application Infrastructure

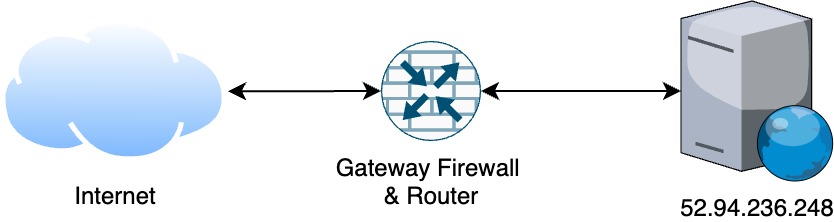

We started with an infrastructure as shown:

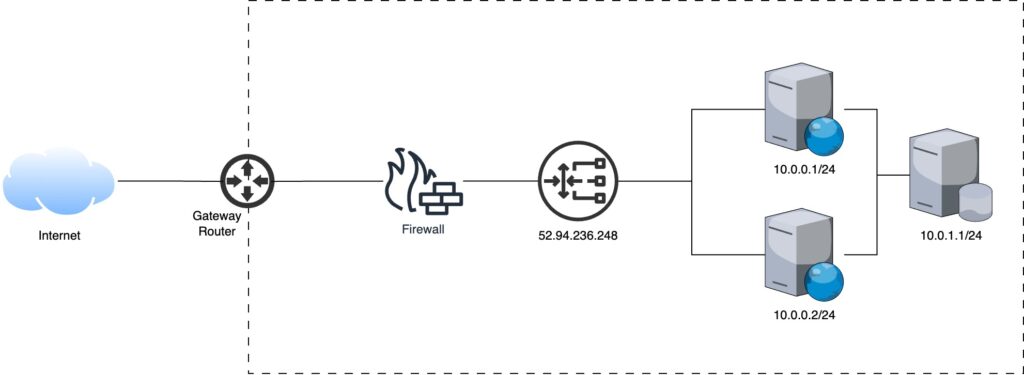

Our goal is to scale the environment horizontally by adding nodes for the application to run on and a mechanism to control the traffic to each node. This mechanism is called load balancing, and it is achieved with a load balancer, which can be either hardware or software.

A load balancer acts as a traffic proxy and distributes network or application traffic across endpoints on a number of servers running the same code base of the application. The load balancer distributes traffic based on algorithms such as:

- Round robin

- Threshold

- Random with two choices

- Least connections

- Least time

- URL hash

- Source IP hash

- Consistent hashing
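
To make two of these algorithms concrete, here is a minimal Python sketch of round robin and least connections. The class names, method names and the node addresses other than 10.0.0.1 are assumptions made for illustration; a real load balancer also handles health checks, weights and concurrency, which are left out here.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out nodes in a fixed rotating order."""

    def __init__(self, nodes):
        # cycle() repeats the node list endlessly in the same order.
        self._nodes = cycle(list(nodes))

    def pick(self):
        return next(self._nodes)


class LeastConnectionsBalancer:
    """Picks the node that currently has the fewest open connections."""

    def __init__(self, nodes):
        # Hypothetical bookkeeping: node address -> open connection count.
        self.active = {node: 0 for node in nodes}

    def pick(self):
        # On a tie, min() returns the node that was listed first.
        node = min(self.active, key=self.active.get)
        self.active[node] += 1
        return node

    def release(self, node):
        # Call this when a connection to the node is closed.
        self.active[node] -= 1


if __name__ == "__main__":
    nodes = ["10.0.0.1", "10.0.0.2", "10.0.0.3"]  # assumed pool of nodes

    rr = RoundRobinBalancer(nodes)
    print([rr.pick() for _ in range(4)])

    lc = LeastConnectionsBalancer(nodes)
    first = lc.pick()
    second = lc.pick()
    lc.release(first)
    print(first, second, lc.pick())
```

Round robin ignores how busy each node is, which is fine when requests cost roughly the same. Least connections tends to suit workloads where some requests stay open much longer than others.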

Web Request Cycle

With the upgrade to the infrastructure, the web request cycle changes slightly. The load balancer receives all the web requests and distributes them amongst the pool of resources. This change affects Step 3 of the cycle described in the previous article.

Step 3: Transport Control Protocol (TCP)

When the SYN message from the client reaches the load balancer, the load balancer decides which node this new packet should go to, based on one of the algorithms discussed above. Suppose the load balancer decides that the message should go to the first node (10.0.0.1/24). The server responds, and subsequent packets follow the same route, i.e. the client keeps communicating with the first node.
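
One way to picture how subsequent packets keep following the same route is a connection table kept by the load balancer. The sketch below is an assumption about how such a table could work, not a description of any particular product: the class name, the `route` and `close` methods, the client address and the second node address are all hypothetical, and node selection uses simple round robin.

```python
class ConnectionTrackingBalancer:
    """Pins every packet of a TCP connection to the node chosen at SYN time."""

    def __init__(self, nodes):
        self._nodes = list(nodes)
        self._next = 0      # index of the next node for round robin
        self._table = {}    # (client_ip, client_port) -> node

    def route(self, client_ip, client_port, syn=False):
        key = (client_ip, client_port)
        if key in self._table:
            # Known connection: keep sending packets to the same node.
            return self._table[key]
        if not syn:
            # A non-SYN packet for an unknown connection has no route.
            raise LookupError(f"no tracked connection for {key}")
        # New connection: choose a node and remember the choice.
        node = self._nodes[self._next % len(self._nodes)]
        self._next += 1
        self._table[key] = node
        return node

    def close(self, client_ip, client_port):
        # Forget the connection once it is torn down.
        self._table.pop((client_ip, client_port), None)


if __name__ == "__main__":
    lb = ConnectionTrackingBalancer(["10.0.0.1", "10.0.0.2"])  # assumed pool
    print(lb.route("203.0.113.7", 51000, syn=True))  # SYN picks a node
    print(lb.route("203.0.113.7", 51000))            # later packets reuse it
    print(lb.route("203.0.113.8", 40222, syn=True))  # a new client gets the next node
```

Keying the table on the client address and port keeps the sketch short; it is the lookup on every packet that produces the "same route" behaviour described in Step 3.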
